FastFlood Docs

Calibration

The calibration process

Calibration is the process of adjusting the input parameters and the landscape description in the model to obtain the best fit with observations. In doing so, you compensate for input data errors, uncertainties, and incorrect model assumptions. Validation is the process of applying a calibrated model to a different event to test its predictive power on unseen data. Calibration and validation are often required when physically-based models are applied in an operational or official context. A calibration starts with the following steps:

- Gather observation data

- Define an objective function

- Choose parameters for calibration

Observation data

Observation data can be of many types, but must relate to a model output variable. In the case of FastFlood, flood extent and peak discharge values are logical options. Flood extent can often be obtained from remote sensing data. For example, Sentinel-1 radar estimates of water cover on the land can be used to generate a flood map. Alternatively, interviews or extrapolation of measurements might be used. For discharge, peak values are required because FastFlood is a-temporal and only predicts peak flow heights and peak discharges. The estimated hydrographs are not suitable for calibration and validation.

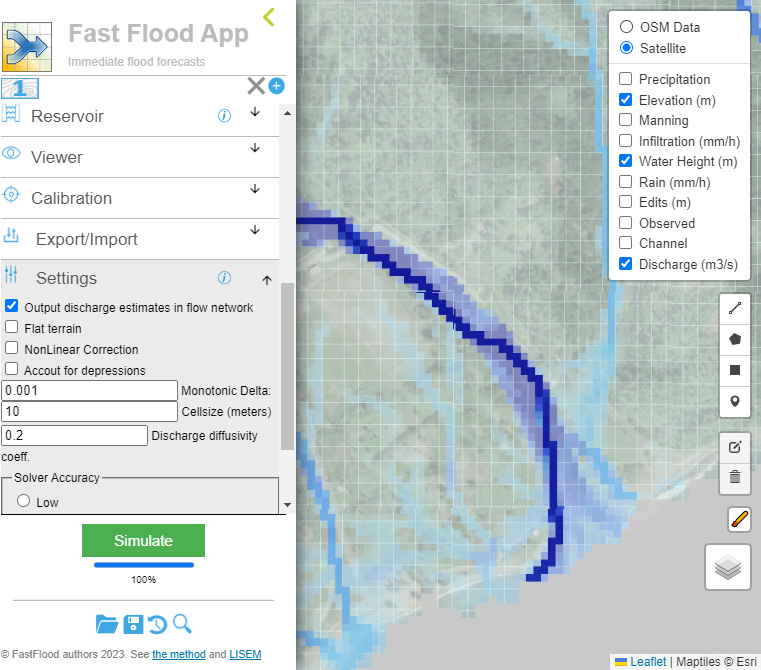

We can first visualize the discharge in the model.

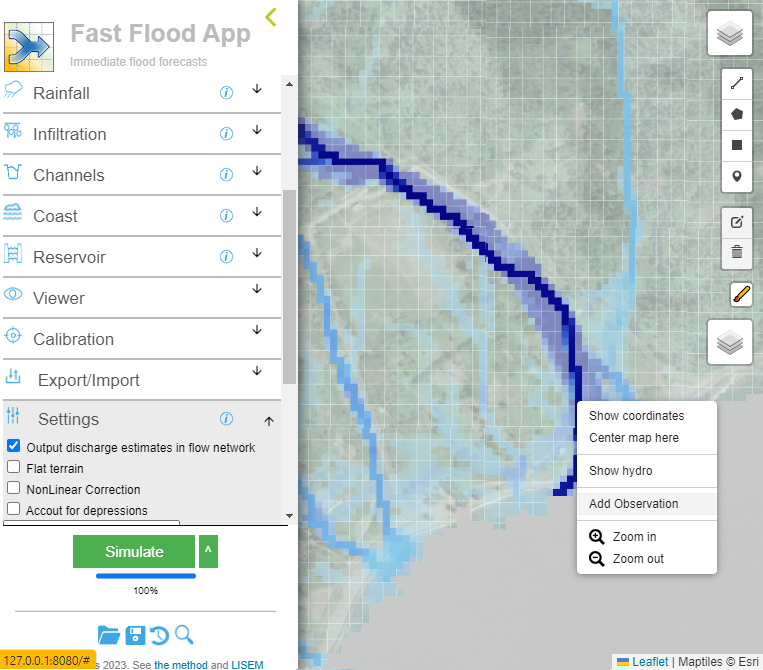

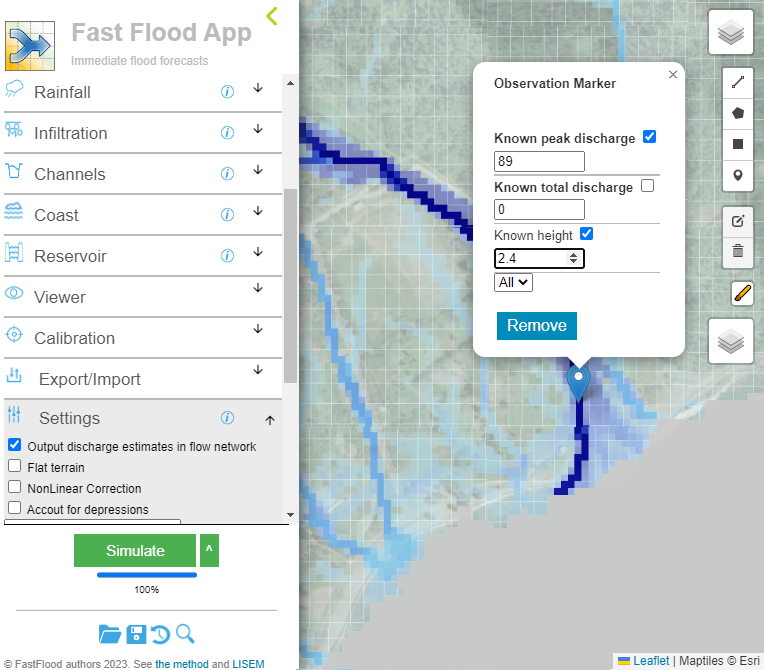

Then, we can add an observation at the correct location. The observation can be specified as peak discharge, total discharge, or maximum flow height.


Objective functions

The objective function takes the model output and the observation data and returns a single number indicating goodness-of-fit or model error. Many such metrics exist. For binary data (flood/no-flood), you can classify each pixel as TP (true positive), TN (true negative), FP (false positive), or FN (false negative), with N the total count (TN+TP+FN+FP). Common metrics are then:

- Percentage Accuracy = (TN + TP)/(TN+TP+FN+FP)

- Cohen's Kappa = (Po - Pe)/(1 - Pe), with Po = (TP + TN)/N and Pe = ((TP + FP)(TP + FN) + (TN + FN)(TN + FP))/N^2

- F1 score = 2 * TP/(2*TP + FP + FN)

- Matthews correlation coefficient = (TP*TN - FP*FN)/sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN))
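The metrics listed above can be sketched in a few lines of Python. This is an illustrative example only, not FastFlood's internal implementation: the function names `confusion_counts` and `binary_metrics` are hypothetical, and the flood maps are assumed to be flattened into equal-length sequences of 0/1 values. Cohen's Kappa and the Matthews correlation coefficient are computed using their standard definitions.

```python
import math

def confusion_counts(observed, predicted):
    """Count TP, TN, FP, FN for two equal-length sequences of 0/1 flood flags."""
    tp = tn = fp = fn = 0
    for obs, pred in zip(observed, predicted):
        if pred and obs:
            tp += 1
        elif not pred and not obs:
            tn += 1
        elif pred and not obs:
            fp += 1
        else:
            fn += 1
    return tp, tn, fp, fn

def binary_metrics(tp, tn, fp, fn):
    """Return accuracy, Cohen's kappa, F1 score and MCC as a dictionary."""
    n = tp + tn + fp + fn
    accuracy = (tp + tn) / n
    # Expected agreement by chance, from the row and column totals.
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n**2
    kappa = (accuracy - p_e) / (1 - p_e) if p_e != 1 else 0.0
    f1_denominator = 2 * tp + fp + fn
    f1 = 2 * tp / f1_denominator if f1_denominator else 0.0
    mcc_denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_denominator if mcc_denominator else 0.0
    return {"accuracy": accuracy, "kappa": kappa, "f1": f1, "mcc": mcc}

# Ten pixels: 1 = flooded, 0 = dry (made-up values for illustration).
observed = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
predicted = [1, 1, 0, 1, 0, 0, 0, 0, 0, 0]
scores = binary_metrics(*confusion_counts(observed, predicted))
```

The guards against a zero denominator return 0.0, which is a choice made for this sketch; other conventions exist for those degenerate cases.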

Each of these has benefits and drawbacks, depending on which aspects of the comparison matter most. Try them all and decide for yourself in the end. Percentage accuracy is a safe option, but for large areas it can favour under-prediction: when most pixels are dry, true negatives dominate the score. For real-valued variables, other metrics can be used:

- Pearson correlation

- RMSE
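As a minimal sketch, both real-valued metrics can be computed with the standard library alone. The function names `rmse` and `pearson` are hypothetical, and the inputs are assumed to be paired lists of observed and simulated values (for example, peak discharges at several locations).

```python
import math

def rmse(observed, simulated):
    """Root-mean-square error between paired observed and simulated values."""
    squared = [(s - o) ** 2 for o, s in zip(observed, simulated)]
    return math.sqrt(sum(squared) / len(squared))

def pearson(observed, simulated):
    """Pearson correlation coefficient between paired values."""
    n = len(observed)
    mean_o = sum(observed) / n
    mean_s = sum(simulated) / n
    cov = sum((o - mean_o) * (s - mean_s) for o, s in zip(observed, simulated))
    var_o = sum((o - mean_o) ** 2 for o in observed)
    var_s = sum((s - mean_s) ** 2 for s in simulated)
    return cov / math.sqrt(var_o * var_s)
```

Note that the two metrics measure different things: a simulation that is consistently twice the observed value still has a Pearson correlation of 1, while its RMSE is large. RMSE is therefore the more natural choice when the magnitude itself must match.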

Parameter selection

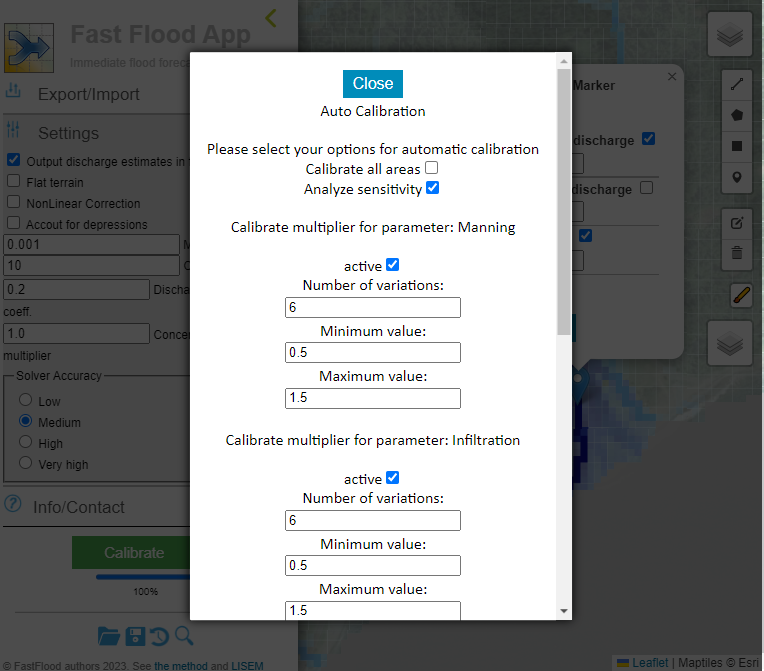

To improve model performance, the right parameters must be altered. It is not desirable to alter too many parameters, as each unique combination must be tested, resulting in many simulations. Typically, Manning's surface roughness and the infiltration rate (Ksat) should be taken as calibration parameters. In the case of levee systems, channel dimensions might be an additional option to improve the fit with channel design capacity.

Calibration algorithms

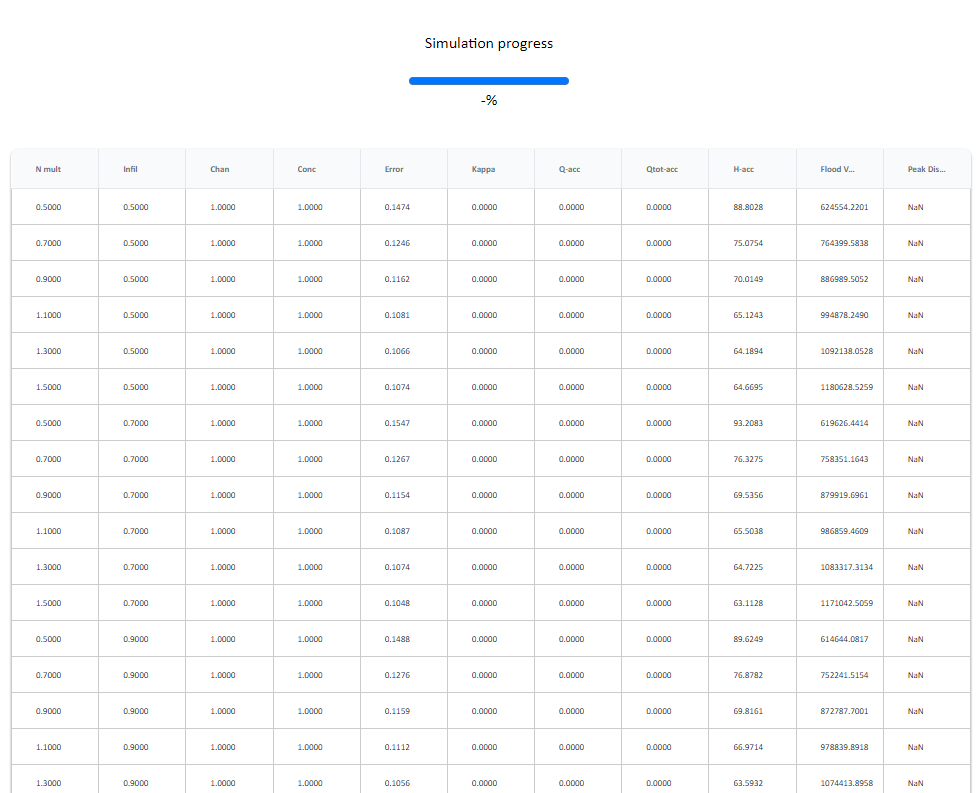

Once the choices above have been made, the primary way to calibrate is to run a simulation for each unique combination of input parameters. Define a number of values for each parameter, for example 50, 70, 90, 110, 130 and 150 % of the original value, and use the built-in calibration multipliers to re-scale the input values/maps. Note that the number of simulations grows exponentially with the number of parameters (with six values each, 2 parameters -> 6*6 = 36 simulations, 3 parameters -> 6*6*6 = 216, etc.). If you require higher accuracy, you can define a narrower search area around the optimal simulation found in the first calibration attempt.
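The brute-force search described above can be sketched as follows. This is not FastFlood's own code: `brute_force_calibration` and `toy_error` are hypothetical names, and `toy_error` is a made-up stand-in for "run the model with these multipliers and evaluate the objective function".

```python
from itertools import product

# Multipliers corresponding to 50, 70, 90, 110, 130 and 150 % of the original value.
MULTIPLIERS = [0.5, 0.7, 0.9, 1.1, 1.3, 1.5]

def brute_force_calibration(run_and_score, parameter_names, multipliers=MULTIPLIERS):
    """Try every combination of multipliers and keep the one with the lowest error."""
    best_error = None
    best_settings = None
    n_runs = 0
    for combo in product(multipliers, repeat=len(parameter_names)):
        settings = dict(zip(parameter_names, combo))
        error = run_and_score(settings)  # one full simulation per combination
        n_runs += 1
        if best_error is None or error < best_error:
            best_error, best_settings = error, settings
    return best_settings, best_error, n_runs

def toy_error(settings):
    """Hypothetical error surface with its minimum at manning=1.1, ksat=0.7."""
    return (settings["manning"] - 1.1) ** 2 + (settings["ksat"] - 0.7) ** 2

best_settings, best_error, n_runs = brute_force_calibration(
    toy_error, ["manning", "ksat"]
)
```

With two parameters and six multipliers each, `n_runs` is 36. Passing a narrower list of multipliers centred on `best_settings` gives the refined second pass mentioned above.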

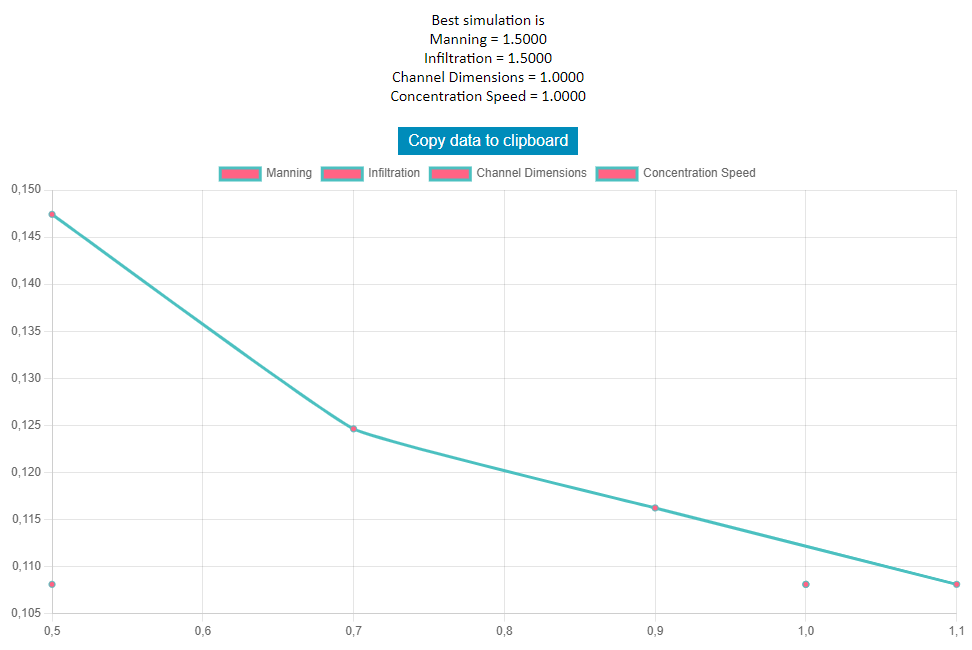

The finalized calibration will show a calibration graph, indicating the final optimal settings and how those relate to the parameter space.

Currently, a brute-force approach is available, which tries multiple combinations of parameter values.


In the future, we aim to include automatic calibration using gradient descent, which doesn't suffer from the exponential increase in the number of simulations. This algorithm instead searches the n-dimensional parameter space using gradients to step towards lower error values.
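To illustrate the idea, here is a minimal sketch of gradient descent on a toy error surface. It does not represent the planned FastFlood feature: the gradient is approximated with forward finite differences (an assumption for this example), and `toy_error`, the learning rate, and the iteration count are all hypothetical.

```python
def finite_difference_gradient(error_fn, params, step=1e-3):
    """Approximate the gradient with one extra error evaluation per parameter."""
    base = error_fn(params)
    gradient = []
    for i in range(len(params)):
        shifted = list(params)
        shifted[i] += step
        gradient.append((error_fn(shifted) - base) / step)
    return gradient

def gradient_descent(error_fn, start, learning_rate=0.1, iterations=50):
    """Repeatedly step against the gradient towards lower error values."""
    params = list(start)
    for _ in range(iterations):
        gradient = finite_difference_gradient(error_fn, params)
        params = [p - learning_rate * g for p, g in zip(params, gradient)]
    return params

def toy_error(params):
    """Hypothetical smooth error surface with its minimum at (1.2, 0.8)."""
    manning_multiplier, ksat_multiplier = params
    return (manning_multiplier - 1.2) ** 2 + (ksat_multiplier - 0.8) ** 2

# Start from the uncalibrated model (both multipliers equal to 1).
best_params = gradient_descent(toy_error, start=[1.0, 1.0])
```

In this sketch each step costs n + 1 error evaluations for n parameters, so the cost per step grows linearly with n, whereas a six-value grid needs 6^n runs. On a real error surface, gradient descent can settle in a local minimum, so the starting point matters.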